Python program to download files recursively from an AWS S3 bucket to Databricks DBFS

How to enable a single public IP for outbound traffic from a Databricks cluster?

Key points to be considered before choosing a modern Industrial IoT Platform

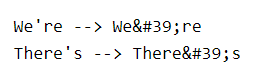

How to handle URL-encoded characters in a DataFrame?

How to enable or disable public access to Azure Blob Storage (storage account) using a Python program?

How to enable and disable SFTP on Azure Blob Storage using a Python program?

How to print an Azure Key Vault secret value in a Databricks notebook? Print shows REDACTED.

How to quickly migrate secrets from one Azure Key Vault to another?

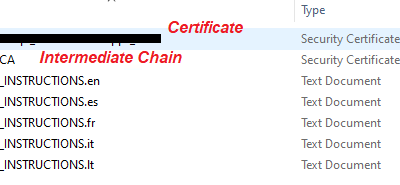

Error from Postman: Unable to verify the first certificate

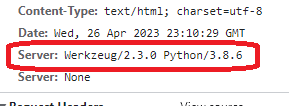

How to remove the verbose server banner from a Python Flask application?

Where is the bootstrap user-data script located within an AWS EC2 instance (Linux)?

How to convert or encode a file into a single-line base64 string using the Linux command line?

How to delete an AWS secret immediately without a recovery window?

How to list the AWS EC2 instances in an account using the AWS CLI?

AWS KMS Key Alias not working in IAM Policies

GitLab self-hosted runner: ERROR: Job failed: prepare environment: Process exited with status 1.

AWS Lambda error: No module named 'psycopg2._psycopg'

How to synchronize a remote yum repository in CentOS or RHEL?

How to change the date format in a Python pandas DataFrame?

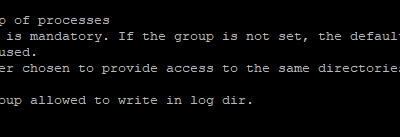

How to change the user of the PHP-FPM server in CentOS / RHEL / Rocky Linux?

How to log all the SQL queries generated by WordPress ?

How to install Python packages in a Databricks notebook?

How to monitor the performance of FastAPI applications?

Add Directory option missing in ADLS / Azure Storage account container

How to restrict the access of a user / service principal to a specific folder inside ADLS (Azure Data Lake Storage) / Blob Storage?

Unable to publish Azure Data Factory – ADF pipeline – Authorization issue

New laptop hard drive not being detected

Cost-effective way to back up data from mobile phones and computers